As is typical, when I get an idea for a new post, I’m bumbling around the internet, mindlessly consuming content, when some bullshit jumps out and presses my buttons. Today it was a conversation on the r/programming subreddit.

Microsoft is fighting a class action lawsuit regarding GitHub’s Copilot. Copilot is like ChatGPT for software engineers: you describe the code you want, and the tool spits out something that might work. GitHub used its privileged position as a repository for millions of open-source projects to train Copilot on billions of lines of open-source code.

That pissed off enough software engineers for them to make a big deal about it in the courts. The outcome of this case will be fascinating, but the court of public opinion is rampaging in the meantime.

It will not build you a new implementation […] It’s going to find an existing implementation, strip out comments, clean up naming and insert it into your codebase. Clearly illegal for humans but somehow OK if done on a massive scale?

A user of r/programming on how Copilot works

The above quote mischaracterizes what machine learning does and perpetuates the myth that generative AI “copies and pastes” the work of humans. It’s a pervasive misunderstanding of the tech—and this was on a forum intended for techy people.

Zooming out to the broader conversation about AI—and especially about AI-generated art—it becomes apparent that many of the most opinionated among us don’t know how these systems work.

To be clear, I’m not outright dismissing the claims of ethics violations regarding using art to train an AI model. These claims are based on genuine concerns and affect real people, so I want to dig into them. However, we cannot have an informed discussion until we know what the fuck is going on inside these machine-learning models in the first place.

What the Fuck is Going On Inside These Machine-Learning Models In the First Place?

One of the first things we do when casually discussing AI-generated art is we personify the algorithm. We’re humans attempting to explain complex things, and personification is a simple crutch that does a lot of heavy lifting with fewer words.

For example, we might say, “The AI analyzed millions of images and used your text prompt to draw a picture based on what it learned about art.” Because we know machine learning models aren’t sentient (yet), that statement makes us think of a program rapidly sorting a database of images, using an algorithm to select the parts that look like what you asked for, and mashing them together into a collage of results—the program copies artists!

It sounds like theft.

However, that’s different from how these programs actually work.

How Machine Learning Models Work

The process may differ between the popular DALL-E, Midjourney, Stable Diffusion, and other models used today, but none of them copy-and-paste from a database.

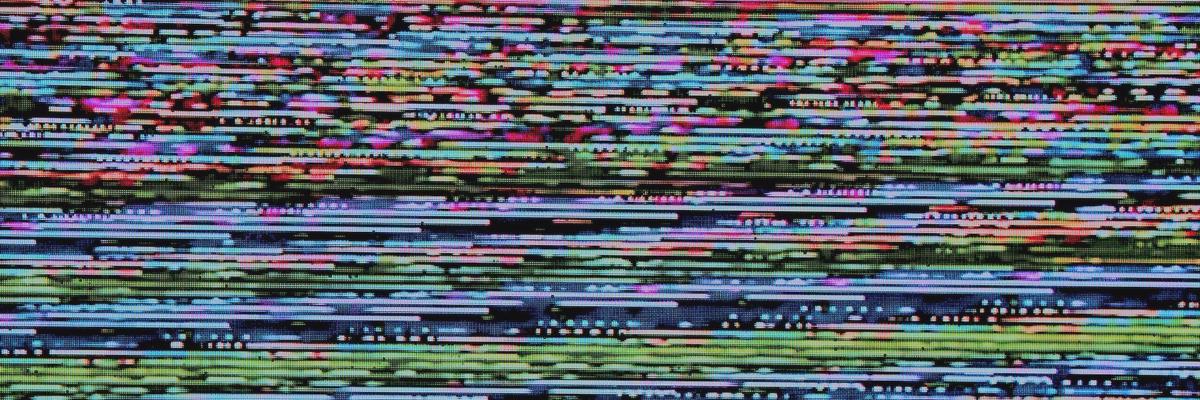

Let’s begin with how Stable Diffusion works. The process starts with noise.

Computer, Show Me a Photo of a Hotdog

Every time you prompt the model to generate a picture, the image begins life as a random mess of pixels—like an old TV with no signal. Kinda like this:

So how does the system convert this mess into a hotdog?

Since the program has analyzed millions of pictures of hotdogs from the internet, it has a pretty good idea of the typical hotdog color. For the sake of this explanation, I’m going to say a hotdog is ochre, but the model doesn’t make high-level decisions like this.

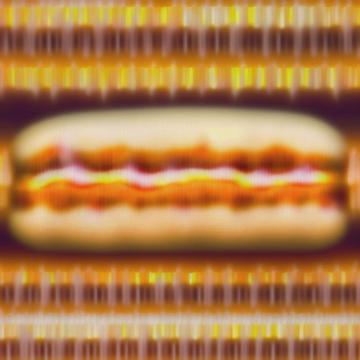

It analyzes this mess of pixels to make tiny changes. Pixel-by-pixel, it shifts the random colors towards something more like the color ochre. Most photos of hotdogs frame the tubular treat in the center, wrapped in a bun, so the algo starts changing some pixels to include a bun. As it moves from pixel to pixel, it changes them closer to the most probable color that would make a good picture of a hotdog.

After the first pass, the picture still looks like a mess—it only made tiny changes to the noise, and the result does not look like a hotdog. So, the algo does the whole process again, changing the new image to look more like all the hotdog images it has seen.

After many repetitions, the random colors have formed a hotdog-and-bun-colored soup roughly in the shape of most hotdogs. This blurry, swirling mess almost looks like a hotdog, but the model didn’t copy a real picture of a particular hotdog to get this far.

It used probabilities, pixel by pixel, to form the image. The model will continue this process (called “denoising” or “reverse diffusion”) again and again until the output looks more like a hotdog.

The prompt I used to generate these hotdogs was “a hotdog with mustard on a bun, as part of an advertisement for a hotdog vendor.” I used Midjourney rather than Stable Diffusion because I have the Midjourney Discord bot open, but the image generation process is similar for both models.

Cool. We Generated a Mid Picture of a Hotdog From Random Noise.

I told several lies in the above explainer.

Don’t get me wrong; the general concept is very much the way I described it, but the actual process is far more interesting. If you want to take a deep dive, check out the article, “How does Stable Diffusion work?”

I’ll summarize what’s happening below for everyone else, but feel free to skip it if it’s too far into the weeds for you. As long as you understand that images are not copied but created iteratively, one pixel at a time, you’ll have no trouble grokking the rest of the article.

How Stable Diffusion Actually Works

I said above that everything starts with noise, but I was getting ahead of myself. The training begins with vast quantities of images. These images are corrupted with noise in measured steps until the resulting images are completely random.

Adding noise to an image in this way is called forward diffusion.

During this process, a neural network trains to estimate the amount of noise added in each step. The result of this training is a neural predictor that can estimate the amount and position of noise added to any image.
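If you like seeing ideas as code, here is a minimal Python sketch of forward diffusion. It assumes a common formulation from the diffusion literature, where each step keeps most of the current image and blends in a little fresh Gaussian noise. The function name `forward_diffusion`, the `beta` value, and the four-pixel “image” are all made up for illustration; none of this is taken from Stable Diffusion's actual code.

```python
import math
import random


def forward_diffusion(pixels, steps, beta=0.05, seed=0):
    """Corrupt an image with Gaussian noise in measured steps.

    Assumption: each step keeps sqrt(1 - beta) of the current image and
    mixes in sqrt(beta) of fresh noise. `beta` is an illustrative value.
    Returns the image after every step, starting with the clean original.
    """
    rng = random.Random(seed)
    history = [list(pixels)]
    current = list(pixels)
    for _ in range(steps):
        current = [
            math.sqrt(1 - beta) * value + math.sqrt(beta) * rng.gauss(0, 1)
            for value in current
        ]
        history.append(current)
    return history


# A made-up four-pixel grayscale "image" with values between -1 and 1.
clean = [0.9, 0.8, -0.7, -0.9]
history = forward_diffusion(clean, steps=200)

# Fraction of the original signal that survives t steps: (1 - beta) ** (t / 2).
surviving = math.sqrt(1 - 0.05) ** 200
print(f"fraction of the original left after 200 steps: {surviving:.4f}")
```

During training, the network would be shown noisy images like the ones in `history` and asked to guess the noise that was mixed in.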

I Put My Thing Down, Flip It, and Reverse It

Kinda like Missy Elliott in the early 2000s, the next step is to reverse the process.

1. Start with completely random noise. This isn’t one of the noisy images used in the training; we’re talking about a brand new random image.

2. Ask the predictor to estimate the added noise. You and I both know that the honest answer is 100% noise, but the predictor thinks it’s working with a systematically corrupted image that happened over many steps, like the ones on which it trained.

3. This is where the magic happens. We subtract the estimated noise from the random image. Then we repeat steps 2 and 3 until all noise is removed, resulting in a clear picture!

That’s how all of these diffusion models work. We give the model some random noise, tell it that this used to be a clear picture of a hotdog, and ask it to remove some noise from the image.
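The loop above can be sketched in a few lines of Python. Big caveat: the real predictor is a trained neural network, and I'm not reproducing that here. The `predict_noise` function below is a hand-written stand-in that assumes pixels tend to hover around one typical value (`TYPICAL_VALUE`, a number I made up). `STEP_SIZE` and the step count are equally arbitrary.

```python
import random

TYPICAL_VALUE = 0.6  # made-up "typical" pixel value, purely illustrative
STEP_SIZE = 0.1      # fraction of the estimated noise removed per pass


def predict_noise(pixels):
    """Stand-in for the trained predictor (hypothetical, not a real model).

    It treats everything that deviates from the typical value as noise.
    """
    return [value - TYPICAL_VALUE for value in pixels]


def reverse_diffusion(num_pixels, steps, seed=0):
    rng = random.Random(seed)
    # Step 1: brand new random noise, not a training image.
    image = [rng.gauss(0, 1) for _ in range(num_pixels)]
    start = list(image)
    for _ in range(steps):
        # Step 2: ask the predictor to estimate the noise.
        noise = predict_noise(image)
        # Step 3: subtract a portion of that estimate, then go around again.
        image = [value - STEP_SIZE * n for value, n in zip(image, noise)]
    return start, image


start, result = reverse_diffusion(num_pixels=4, steps=50)
print("start: ", [round(value, 2) for value in start])
print("result:", [round(value, 2) for value in result])
```

This toy only ever converges on a flat color, because the stand-in knows a single number. What matters is the shape of the loop: nothing inside it looks up a stored picture. It only asks for a noise estimate and subtracts a bit of it, over and over.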

The problem with the above process is that it is expensive. DALL-E runs on far more powerful and costly hardware than your average laptop.

Stable Diffusion changed the game by taking the process a step further. It encodes the images down into something called “latent space,” which is many times smaller than the original noise image, runs the noise prediction and removal process there, and then decodes the result from latent space into a clear picture.
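To get a feel for why latent space is cheaper, here is a toy Python sketch. Stable Diffusion uses a trained encoder and decoder for this step. The `encode` and `decode` functions below are crude stand-ins I made up (averaging 2×2 blocks, then stretching them back out), and the 4×4 grid is just example data.

```python
def encode(image):
    """Toy encoder: average each 2x2 block into one value (hypothetical)."""
    size = len(image)
    return [
        [
            (image[r][c] + image[r][c + 1] + image[r + 1][c] + image[r + 1][c + 1]) / 4
            for c in range(0, size, 2)
        ]
        for r in range(0, size, 2)
    ]


def decode(latent):
    """Toy decoder: stretch each latent value back into a 2x2 block."""
    image = []
    for row in latent:
        wide = [value for value in row for _ in range(2)]
        image.append(list(wide))
        image.append(list(wide))
    return image


image = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.5, 0.5, 0.25, 0.25],
    [0.5, 0.5, 0.25, 0.25],
]
latent = encode(image)     # a 2x2 grid: four values instead of sixteen
restored = decode(latent)  # back to a 4x4 grid
print(latent)
print(restored == image)
```

The denoising loop would run on the four values in `latent` rather than the sixteen in `image`, which is where the savings come from. The round trip is only lossless here because the example image is already blocky; a real photo would lose detail with an encoder this crude.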

Again, if that sounds interesting to you, go check out this article.

The Ethics of AI-Generated Art Are Convoluted

The arguments against using AI-generated art fall into several broad categories, each with nuance. Some of these arguments are misguided, but others are valid concerns we should discuss.

AI Art Is Theft

The “AI Art Is Theft” movement is more complex than it sounds. On the one hand, there needs to be more understanding of how these models work. For example, one of the “telltale signs” that AI is simply copying and pasting snippets from real artists is the presence of ghostly watermarks or garbled signatures in the output.

Copied & Poorly Covered Up

Seeing a Lensa portrait where what was clearly once an artist’s signature is visible in the bottom right and people are still trying to argue it isn’t theft. 🙃

— Lauryn Ipsum (@LaurynIpsum) December 6, 2022

I understand how this looks. Without knowing how these models work, it does seem like they are blatantly copying artists when these artifacts are in the output. However, the models are just tools with no understanding of what they generate. Many works of art contain signatures. The models think adding those little squiggly lines is much like adding a branch to a tree (for example).

The program didn’t copy an artist’s signature and then poorly attempt to hide it; instead, it tried to faithfully produce what we humans trained it to understand as “art”—including the concept of a signature. The flaw here lies in training.

Stealing Art to Feed The Machine

What I hate about AI art is not that I fear for my job or that I’m afraid of change. I hate that it is art theft taken to the extreme. Using other people’s years of work to feed it into an algorithm so you can generate something similar in a few seconds is just so morally foul. https://t.co/5u3emVOVQf

— VERTI (@VERTIGRIS_ART) August 14, 2022

This powerful argument covers more ground than the simple phrase, “it is art theft.” I’ll dig into the moral implications of AI-generated art later in this article, but I want to stick to the concept of theft here.

A classic rebuttal to the argument that “using art in training data is theft” goes like this:

Referencing other artists’ works is not theft. It’s how we learn to be artists. If this was theft, no one could create derivative works or things influenced by those who came before.

Tech Bros, mostly.

It’s a compelling rebuttal, but does it hold water? How about a thought experiment:

Joel loves the work and style of contemporary artist Zeng Fanzhi, but he could never hope to afford one of Fanzhi’s paintings. So, Joel hires a competent human artist to research Zeng Fanzhi and create a new work in the artist’s style. Joel pays the new artist for the new painting.

When Joel shows off the work to his friends, he doesn’t claim that Zeng Fanzhi made the painting; he tells them that he just really likes the style. Zeng Fanzhi is unaware of this derived work, did not consent to its creation, and did not receive compensation for the transaction.

Was this theft?

I argue that it is not. You cannot copyright style. Artists are free to make their own works regardless of how closely they mimic your style.

You may think this hypothetical experiment is not the same as using an AI model to generate an image in the style of Zeng Fanzhi. Is that because the tool is too easy? Is it because the tool lacks human intuition? That leads us to the following few arguments.

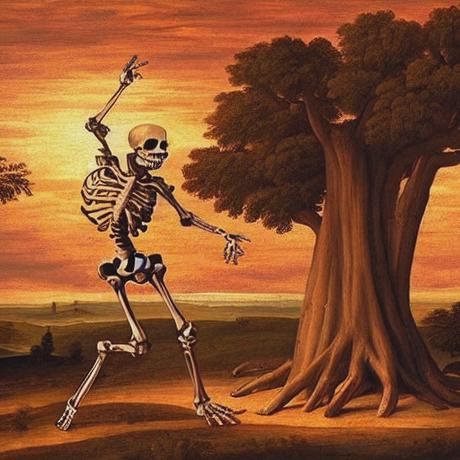

AI Art Isn’t Real Art

I’ll always remember the first time I saw the above image. In 2022, Jason Allen generated this image using Midjourney before submitting it to the Colorado State Fair art competition, where it took first place in the digital category.

Competition is core to the human experience. We expect professional athletes, chess grandmasters, World Series of Poker players, and e-sports contenders to compete unassisted. It’s the whole point.

I don’t care how you want to spin it; Allen tricked people. He says he submitted the art as “Jason Allen via Midjourney,” but there’s no way the judges could have known that Allen did nothing but type out a prompt. It was disingenuous at best.

Competitive trickery aside, is it art? I think it’s beautiful. It’s magnificent. It’s art to me.

Art Requires Human Intuition—It Requires Soul

It can be terrifying to realize that computers can suddenly do what was previously a human-only affair. It can feel downright existential. By that same token, most AI art from the current machine learning models lacks something important: some “spark” of intent that delineates the difference between good art and mindless junk.

However, it’s presumptuous and maybe a bit pretentious to claim that “real art” requires human intention.

What about photography? When a photographer stumbles on a secluded beach, where a small wooden boat is nestled on the dunes at sunset, and they snap the perfect angle: did they create any of that, or were they just lucky to be in the right place at the right time? Is it art? I think so, but not because the photographer imparted a human intention upon the world; they were simply a witness.

It takes great skill to find and take these shots. But did they create the art, or did Mother Nature do it?

AI-Generated Art Cannot Be Copyrighted

Copyright law only protects ‘the fruits of intellectual labor’ that ‘are founded in the creative powers of the [human] mind.’ […] The Office will not register works ‘produced by a machine or mere mechanical process’ that operates ‘without any creative input or intervention from a human author’ because, under the statute, ‘a work must be created by a human being’

That’s an unequivocal stance by the U.S. Copyright Office. According to that authority, it cannot afford copyright protection to AI-generated art. That makes a lot of sense, but be careful not to apply this reasoning to the broader question of whether or not these images are “actually art.”

Providing legal protection to something a machine created doesn’t make much sense, but the lack of protection also doesn’t settle whether or not something is art.

AI Will Eliminate Creative Jobs

Won’t someone think of the starving artists?

If you're not creative enough to figure out how to use machines to make Art, maybe you're not really an artist.

— ProfessorWise.eth (@0xAndrewWise) September 13, 2022

The above Twitter exchange is perfectly infuriating.

One side is full of pearl-clutching shock that the art apocalypse is right around the corner (possibly based on legitimate fear). On the other side are derision and a complete lack of empathy from a crypto bro who’s all-in on NFTs (probably based on scoring internet points).

I’m reminded of the promise of self-driving cars.

Jobs In The Trucking Industry Are Doomed

Self-driving cars are inevitable—at least, that’s what the industry keeps promising. The technology is far from ready, but I firmly believe adoption will transpire within our lifetime—maybe within the current decade.

Most of us like to think about self-driving cars as a luxury or a convenience, but there’s one industry ripe for disruption, and very few people are talking about it: trucking.

Put yourself in the shoes of a trucking company. Your number-one liability is your workforce. Your drivers need to sleep; they need health insurance; they need a living wage. A fancy new self-driving semi-truck might be expensive up front, but that truck can drive 24 hours a day, with no healthcare requirements and no need for a paycheck.

A quick look at the U.S. Bureau of Labor Statistics shows that there are approximately 2 million heavy and tractor-trailer truck driving jobs in the United States. And that’s just the big boys; what about delivery trucks? Moving vans? Mail? Taxis?

Suffice it to say that many hardworking drivers will soon wake up and find themselves out of a job, and no one is hiring.

Does that mean that we should stop developing self-driving technology? What about the supposed benefits:

- 94% of crashes are due to human error. If self-driving cars can eliminate human error, think of the lives that could be saved.

- Increased quality of life, mobility, accessibility, and travel, especially for the disabled and elderly.

- Self-driving cars can communicate with each other, providing the opportunity to reduce traffic congestion.

There are more pros and cons, but that’s enough of a diversion from the main topic.

So, is AI coming for our creative jobs? The ramifications of AI-generated content on people’s jobs and lives stretch beyond the limited scope of visual art. I recently watched an interview between Rick Beato and Tim Henson of Polyphia, where Tim said:

I’m pretty sure that here in the next few months—the next year or next few months—we’re gonna have riff generators that can generate Polyphia-type riffs […] everybody’s curious as to whether or not they’re out of a job. […] I see copywriters no longer have a job because the AI can just generate copy.

He followed up with an interesting analogy:

In the ’70s and ‘80s, when hip-hop kinda started and people were sampling records, I could imagine a vast majority of music people—as it was a new form of creation and expression—were kind of like, well, you can’t do that. That’s somebody else’s music! And now it’s like, well, how creative can you sample? You know what I mean? Pretty soon, it’s going to be, how creative can you use the AI. The next big artists are going to be who can prompt the best.

Tim Henson, December 2022

Change is the only constant in life, and change scares people. There will always be a market for truly talented artists. Fast food didn’t kill fine dining any more than AI will kill all creative expression. In the meantime, people will use AI to augment and sometimes replace traditionally creative roles. That’s a bummer, but it’s reality.

Artists Did Not Consent To AI Training

If we steal thoughts from the moderns, it will be cried down as plagiarism; if from the ancients, it will be cried up as erudition.

This one is a big deal. All of the fears and concerns of the previous arguments feed into this one. It has become the go-to attack on AI-generated art.

This position claims that the companies who built the models violated copyright law by scraping artists’ work from the internet without their consent. The same law firm that is suing Microsoft for their Copilot implementation is now suing Midjourney, Stability AI, and DeviantArt with allegations that the entities have infringed on the rights of millions of artists by training their tools on billions of images from the web without the artists’ consent.

Unfortunately for the plaintiffs, the complaint [pdf] is riddled with technical inaccuracies that should be easily dismantled by expert testimony if it ever comes to that. For example, they claim, “By training Stable Diffusion on the Training Images, Stability caused those images to be stored at and incorporated into Stable Diffusion as compressed copies.” As I’ve illustrated above, this is completely inaccurate.

I think Andres Guadamuz of TechnoLlama has a pretty interesting take on the suit:

The other problematic issue in the complaint is the claim that all resulting images are necessarily derivatives of the five billion images used to train the model. I’m not sure if I like the implications of such level of dilution of liability, this is like homeopathy copyright, any trace of a work in the training data will result in a liable derivative. That way madness lies.

Andres Guadamuz, TechnoLlama

The Morality of Training Without Consent

Let’s put ourselves in the shoes of an accomplished artist. Making art is our livelihood. We post our art online to attract new business and thrive in this environment.

If a person were to study our art and practice until they could replicate our style and proficiency, there’s little we could do to stop it, and we probably wouldn’t care anyway. Hell, we’d probably celebrate that person’s efforts. They aren’t likely to knock us out of our defensible, established position.

Now let’s look at AI. A for-profit company creates software that can do almost exactly what we can do. Anyone can use the software to generate art indistinguishable from our own—and they can do it in seconds.

Why would anyone hire us? Sure, there may be a few patrons of the arts who dislike the fact that AI has supplanted artists, and they may only employ human artists as a matter of sticking to their ethics—but the bulk of the market will use the free or astonishingly cheap tool and never give us a call in the first place.

And the worst part: the company used all of the art we posted online for the software to achieve the same level of competency. Without us, the software as written couldn’t compete with us.

The technology isn’t there yet, but it’s damned close. There’s a frustrating analogy that proponents of AI-generated art use when discussing these topics:

Humans can study your craft and do the same thing. AI models are no different.

Guy who loves AI art

It may be ontologically similar, but it’s not really the same, is it? The outcomes certainly aren’t. I can see why this is concerning. It’s certainly ethically and morally dubious if the goal is to monetize the artistic effort of someone else.

The Future Was Yesterday

The train has left the station, the cat is out of the bag, and Pandora’s box is open.

When I was a kid, the internet as we know it didn’t exist. TCP/IP was adopted by ARPANET a few years after I was born. Now we have deep fakes streaming instantly through the cloud to the supercomputer I carry around in my pocket. The speed at which humankind is advancing technology is breathtaking, and it’s only going to get faster from here; ethics sometimes be damned.

Do we accept the exploitation of millions of artists’ efforts for the broader reach of humankind? What if one of these companies figures out how to make a model that doesn’t require other artists’ work for training? Do we make that illegal, too?

Adapting to this reality will define who we are and what we cherish as individuals and as a species.

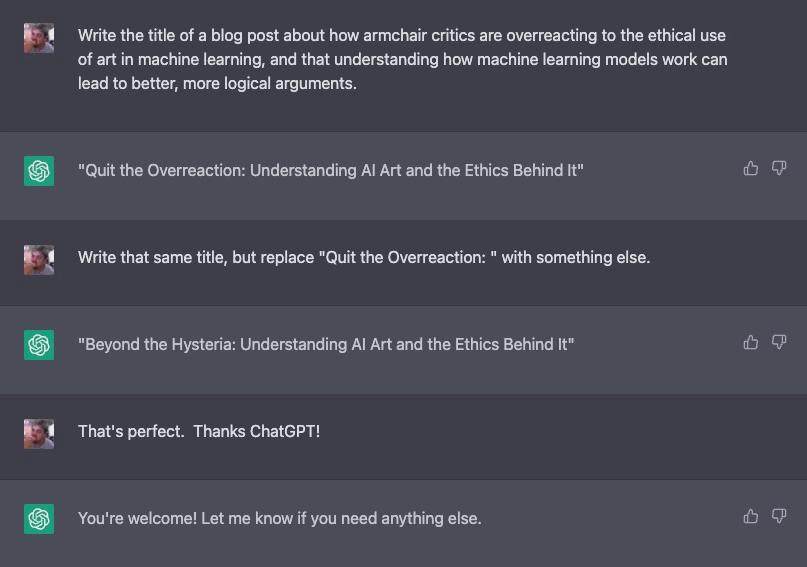

Fun little anecdote before I leave: In the spirit of trying new things, I decided to let ChatGPT write the title of this post.

I think it did a good job. Also, remember to be nice to your tools, and always thank the machine.

It builds character. ;)